Newprosoft gives me a competitive edge, and I don't have to be a programmer to use it. Web Content Extractor (WCE) goes out and gets me information and data not available to my competitors. I tried 3 different scrapers before finding WCE. I wanted simplicity, but it had to work and get me only the data I wanted. Visual Web Ripper is like most others: they ask the user to look at the page and drag/drop or manipulate the software. Visual setups can work okay, but WCE gives you a visual pane to see the page plus manual options, right beside it, to check the code under each page. Checking boxes is better and more precise for me. Newprosoft has included sophisticated scripting to get exactly the data you want, but you don't have to write a single line of code or know anything about code. It's check, point, click, and it makes more sense than all the other options. The best part is Michael's heroic support. No worries about support after purchase with these guys.

Great product. Great company. Great people!

Who is this for: developers who are proficient at programming to.

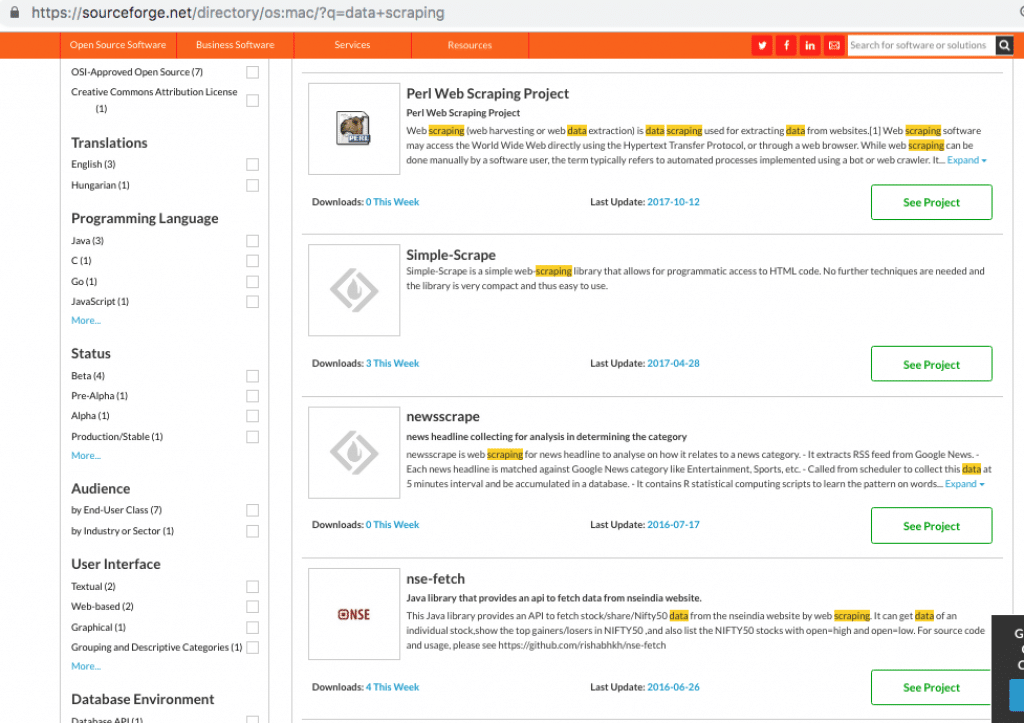


- The current version of WebHarvy Web Scraping Software allows you to save the extracted data as an Excel, XML, CSV, JSON or TSV file. You can also export the scraped data to an SQL database.

- Content Grabber is the only web scraping software that scraping.pro gives 5 out of 5 stars in its Web Scraper Test Drive evaluations. Content Grabber Enterprise is the perfect solution for reliable, large-scale, legally compliant web data extraction operations.

- This article compiles a list of the 30 most popular free web scraping tools around the globe in 2021. They can help you turn unstructured online data into structured data that can be stored on your local computer or in a database.

- While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.
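
To make the idea in the bullets above concrete, here is a minimal sketch of what any of these tools does under the hood: pull specific fields out of a page and save them as structured rows. It is not how WCE, WebHarvy, or Content Grabber are implemented. It uses only Python's standard library, and the names `LinkExtractor`, `scrape`, `to_csv`, and `SAMPLE_HTML` are hypothetical, made up for this illustration. The page is an inline string, so nothing is fetched from the network.

```python
import csv
import io
import json
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the link text and href of every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per extracted link
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.rows.append(
                {"text": "".join(self._text).strip(), "url": self._href}
            )
            self._href = None


def scrape(html):
    """Turn unstructured HTML into a list of structured rows."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["text", "url"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical sample page, standing in for a downloaded document.
SAMPLE_HTML = """
<ul>
  <li><a href="https://example.com/a">Product A</a></li>
  <li><a href="https://example.com/b">Product B</a></li>
</ul>
"""

if __name__ == "__main__":
    rows = scrape(SAMPLE_HTML)
    print(to_csv(rows))                # CSV export
    print(json.dumps(rows, indent=2))  # JSON export
```

A real scraper would add page downloading, error handling, and respect for a site's terms of use; the point here is only the extract-then-export step that the tools above wrap in a point-and-click interface.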